🍏 Nextflow Workflow 🍏

Why nextflow?

Nextflow helps Autometa produce reproducible results while allowing the pipeline to scale across different platforms and hardware.

System Requirements

Currently, the nextflow pipeline requires Docker 🐳, so it must be installed on your system. If you don’t have Docker installed, you can install it from docs.docker.com/get-docker. We plan on removing this dependency in future versions so that other dependency managers (e.g. Conda, Singularity, etc.) can be used.

Nextflow runs on any POSIX-compatible system. Detailed system requirements can be found in the nextflow documentation.

Nextflow (required) and nf-core tools (optional but highly recommended) installation will be discussed in Installing Nextflow and nf-core tools with Conda.

Data Preparation

Metagenome Assembly

You will first need to assemble your shotgun metagenome to provide as input to Autometa.

The following is a typical workflow for metagenome assembly:

1. Trim adapter sequences from the reads. We usually use Trimmomatic.

2. Quality check the trimmed reads to ensure the adapters have been removed. We usually use FastQC.

3. Assemble the trimmed reads. We usually use MetaSPAdes, which is a part of the SPAdes package.

4. Check the quality of your assembly (optional). We usually use metaQuast for this (use the --min-contig 1 option to get an accurate N50). This tool can compute a variety of assembly statistics, one of which is N50. This can often be useful for selecting an appropriate length cutoff value for pre-processing the metagenome.
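For reference, N50 is the length L such that contigs of length L or longer contain at least half of all assembled bases. The minimal Python sketch below computes it from a list of contig lengths; `n50` is a hypothetical helper written for illustration, not part of Autometa or metaQuast.

```python
def n50(contig_lengths):
    """Return the N50 of a collection of contig lengths (in bp).

    Contigs are sorted longest first; N50 is the length of the contig at which
    the running total first reaches half of all assembled bases.
    Returns 0 for an empty input.
    """
    lengths = sorted(contig_lengths, reverse=True)
    half_total = sum(lengths) / 2
    running = 0
    for length in lengths:
        running += length
        if running >= half_total:
            return length
    return 0


print(n50([2, 3, 4, 5, 6, 7, 8, 9, 10]))  # 8
```

For example, `n50([l for l in lengths if l >= 3000])` gives the N50 of only the contigs that would pass a 3000 bp length cutoff.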

Preparing a Sample Sheet

The example sample sheet below shows three possible ways to provide a sample as input. The first example provides a metagenome with paired-end read information, so that contig coverages can be determined using a read-based alignment sub-workflow. The second example uses pre-calculated coverage information by providing a coverage table with the input metagenome assembly. The third example retrieves coverage information from the assembly contig headers (currently, this is only available for metagenomes assembled using SPAdes).

Attention

If you have paired-end read information, you can supply these file paths within the sample sheet and the coverage table will be computed for you (see example_1 in the example sample sheet below).

If you have used any other assembler, you may instead provide a coverage table (see example_2 in the example sample sheet below). Fortunately, Autometa can construct this table for you with autometa-coverage. Use --help to get the complete usage, or for a few examples see 2. Coverage calculation.

If you used SPAdes, Autometa can use the k-mer coverage information in the contig names (see example_3 in the example sample sheet below).

| sample | assembly | fastq_1 | fastq_2 | coverage_tab | cov_from_assembly |
|---|---|---|---|---|---|
| example_1 | /path/to/example/1/metagenome.fna.gz | /path/to/paired-end/fwd_reads.fastq.gz | /path/to/paired-end/rev_reads.fastq.gz | | 0 |
| example_2 | /path/to/example/2/metagenome.fna.gz | | | /path/to/coverage.tsv | 0 |
| example_3 | /path/to/example/3/metagenome.fna.gz | | | | spades |

Note

To retrieve coverage information from a sample’s contig headers, enter the name of the assembler used for that sample in the cov_from_assembly column.

Using a 0 tells the workflow to retrieve coverage information from the coverage table (if one is provided); otherwise, coverage will be calculated from read alignments using the provided paired-end reads. If both paired-end reads and a coverage table are provided, the pipeline will prioritize the coverage table.

If you are providing a coverage table to coverage_tab with your input metagenome, it must be tab-delimited and contain at least the header columns contig and coverage.
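Before listing a coverage table in the sample sheet, you may want to confirm it has the required columns. The sketch below assumes a tab-delimited file with a header row containing contig and coverage (any extra columns are ignored); `read_coverage_table` is a hypothetical helper, not an Autometa function.

```python
import csv
import io


def read_coverage_table(handle):
    """Parse a tab-delimited coverage table into {contig: coverage}.

    Raises ValueError if the required 'contig' or 'coverage' header is missing.
    """
    reader = csv.DictReader(handle, delimiter="\t")
    missing = {"contig", "coverage"} - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"coverage table is missing columns: {sorted(missing)}")
    return {row["contig"]: float(row["coverage"]) for row in reader}


# In-memory example; for a real file use open("/path/to/coverage.tsv", newline="").
table = io.StringIO("contig\tcoverage\nNODE_1\t12.5\nNODE_2\t3.0\n")
print(read_coverage_table(table))  # {'NODE_1': 12.5, 'NODE_2': 3.0}
```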

Supported Assemblers for cov_from_assembly

| Assembler | Supported (Y/N) |
|---|---|
| [meta]SPAdes | Y |
| Unicycler | N |
| Megahit | N |
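SPAdes encodes each contig's k-mer coverage in its header, for example NODE_1_length_1000_cov_12.5 (node number, length in bp, k-mer coverage). The sketch below shows how that value can be read with a regular expression; it is an illustration only, `spades_kmer_coverage` is hypothetical, and Autometa's own parser may differ. Note that this is k-mer coverage, not read coverage.

```python
import re

# SPAdes contig headers look like: NODE_<number>_length_<bp>_cov_<k-mer coverage>
SPADES_HEADER = re.compile(r"^NODE_(\d+)_length_(\d+)_cov_(\d+(?:\.\d+)?)")


def spades_kmer_coverage(header):
    """Return the k-mer coverage embedded in a SPAdes contig header."""
    match = SPADES_HEADER.match(header.lstrip(">"))
    if match is None:
        raise ValueError(f"not a SPAdes-style contig header: {header!r}")
    return float(match.group(3))


print(spades_kmer_coverage(">NODE_1_length_1000_cov_12.5"))  # 12.5
```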

You may copy the CSV below and paste it into a file to begin your sample sheet. You will need to update the entries for your own samples accordingly.

Example sample_sheet.csv

sample,assembly,fastq_1,fastq_2,coverage_tab,cov_from_assembly
example_1,/path/to/example/1/metagenome.fna.gz,/path/to/paired-end/fwd_reads.fastq.gz,/path/to/paired-end/rev_reads.fastq.gz,,0
example_2,/path/to/example/2/metagenome.fna.gz,,,/path/to/coverage.tsv,0
example_3,/path/to/example/3/metagenome.fna.gz,,,,spades
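The documented rules for choosing a coverage source can be restated in code to catch sample-sheet mistakes before launching. The sketch below reads a sheet with the columns above and reports which source each row would use (an assembler name in cov_from_assembly first, then a coverage table, then paired-end reads). It is a hypothetical checker, not the pipeline's implementation, and treating an empty cov_from_assembly like 0 is an assumption.

```python
import csv
import io


def coverage_source(row):
    """Return which coverage source a sample-sheet row would use.

    Hypothetical restatement of the documented rules: an assembler name in
    cov_from_assembly wins; otherwise a coverage table is preferred over reads.
    """
    if row["cov_from_assembly"].strip() not in ("", "0"):
        return "assembly_headers"
    if row["coverage_tab"].strip():
        return "coverage_table"
    if row["fastq_1"].strip() and row["fastq_2"].strip():
        return "read_alignment"
    raise ValueError(f"{row['sample']}: no coverage source provided")


def check_sample_sheet(handle):
    """Map each sample name to the coverage source its row would use."""
    return {row["sample"]: coverage_source(row) for row in csv.DictReader(handle)}


sheet = io.StringIO(
    "sample,assembly,fastq_1,fastq_2,coverage_tab,cov_from_assembly\n"
    "example_1,/path/to/example/1/metagenome.fna.gz,/path/to/paired-end/fwd_reads.fastq.gz,"
    "/path/to/paired-end/rev_reads.fastq.gz,,0\n"
    "example_2,/path/to/example/2/metagenome.fna.gz,,,/path/to/coverage.tsv,0\n"
    "example_3,/path/to/example/3/metagenome.fna.gz,,,,spades\n"
)
print(check_sample_sheet(sheet))
# {'example_1': 'read_alignment', 'example_2': 'coverage_table', 'example_3': 'assembly_headers'}
```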

Caution

Paths to any of the file inputs must be absolute file paths.

| Incorrect | Correct | Description |
|---|---|---|
| | | Replacing any instance of the |
| | | Using the entire file path of the input |
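If a sample sheet was written with relative paths, a small script can rewrite them before launching. The sketch below assumes the path columns are assembly, fastq_1, fastq_2 and coverage_tab; it expands ~ and environment variables such as $HOME, then resolves anything still relative against a base directory you choose. `absolutize` is a hypothetical helper.

```python
import os

PATH_COLUMNS = ("assembly", "fastq_1", "fastq_2", "coverage_tab")


def absolutize(row, base_dir):
    """Return a copy of a sample-sheet row with every file path made absolute."""
    fixed = dict(row)
    for column in PATH_COLUMNS:
        value = fixed.get(column, "")
        if value:
            # Expand $VARS and ~ first, then resolve anything still relative.
            expanded = os.path.expanduser(os.path.expandvars(value))
            fixed[column] = os.path.abspath(os.path.join(base_dir, expanded))
    return fixed


row = {
    "sample": "example_1",
    "assembly": "data/metagenome.fna.gz",
    "fastq_1": "",
    "fastq_2": "",
    "coverage_tab": "",
    "cov_from_assembly": "spades",
}
print(absolutize(row, "/projects/run1")["assembly"])  # /projects/run1/data/metagenome.fna.gz
```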

Basic

While the Autometa Nextflow pipeline can be run using Nextflow directly, we designed it using nf-core standards and templating to provide an easier user experience through use of the nf-core “tools” python library. The directions below demonstrate using a minimal Conda environment to install Nextflow and nf-core tools and then running the Autometa pipeline.

Installing Nextflow and nf-core tools with Conda

If you have not previously installed/used Conda, you can get it using the Miniconda installer appropriate to your system, here: https://docs.conda.io/en/latest/miniconda.html

After installing conda, running the following command will create a minimal Conda environment named “autometa-nf”, and install Nextflow and nf-core tools.

conda env create --file=https://raw.githubusercontent.com/KwanLab/Autometa/main/environment.yml

If you receive the message…

CondaValueError: prefix already exists:

…it means you have already created the environment. If you want to overwrite/update the environment, then add the --force flag to the end of the command.

conda env create --file=https://raw.githubusercontent.com/KwanLab/Autometa/main/environment.yml --force

Once Conda has finished creating the environment, be sure to activate it:

conda activate autometa-nf
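To confirm the activated environment exposes both tools, a short Python check can help. This minimal sketch is not part of Autometa: it only asks `shutil.which` whether executables named nextflow and nf-core are on your PATH, and the function name `check_tools` is hypothetical.

```python
import shutil


def check_tools(tools=("nextflow", "nf-core")):
    """Map each executable name to its resolved path, or None if not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


for tool, path in check_tools().items():
    print(f"{tool}: {path or 'NOT FOUND (is autometa-nf activated?)'}")
```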

Using nf-core

Download/launch the Autometa Nextflow pipeline using nf-core tools. The stable version of Autometa will always be the “main” git branch. To use an in-development git branch, replace “main” in the command with the name of the desired branch. After the pipeline downloads, nf-core will start the pipeline launch process.

nf-core launch KwanLab/Autometa -r main

You will then be asked to choose “Web based” or “Command line” for selecting/providing options. While it is possible to use the command line version, the web-based GUI is easier to use and preferred. Use the arrow keys to select one or the other and then press return/enter.

Setting parameters with a web-based GUI

The GUI will present all available parameters, though some extra parameters may be hidden (these can be revealed by selecting “Show hidden params” on the right side of the page).

Required parameters

The first required parameter is the input sample sheet for the Autometa workflow, specified using --input. This is the path to your input sample sheet.

See Preparing a Sample Sheet for additional details.

The other required parameter is a nextflow argument, specified with -profile. This configures nextflow and the Autometa workflow as outlined in the respective “profiles” section in the pipeline’s nextflow.config file.

- standard (default): runs all process jobs locally (currently this requires Docker, i.e. docker is enabled for all processes in the default profile).
- slurm: submits all process jobs into the slurm queue. See SLURM before using.
- docker: enables docker for all processes.

Caution

Additional profiles exist in the nextflow.config file; however, these have not yet been tested. If you are able to successfully configure these profiles, please get in touch or submit a pull request and we will add these configurations to the repository.

- conda: Enables running all processes using conda
- singularity: Enables running all processes using singularity
- podman: Enables running all processes using podman
- shifter: Enables running all processes using shifter
- charliecloud: Enables running all processes using charliecloud

Caution

Notice the difference in the number of hyphens used in --input and -profile. --input is an Autometa workflow parameter, whereas -profile is a nextflow argument. This difference holds for all arguments: those passed to the Autometa workflow use two hyphens, and those passed to nextflow use one.
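To make the hyphen convention concrete, here is a small Python sketch that assembles a `nextflow run` argument list: Autometa parameters get two hyphens, nextflow options get one. `build_nextflow_command` is a hypothetical helper, not part of Autometa.

```python
def build_nextflow_command(pipeline, workflow_params, nextflow_options=(), resume=False):
    """Assemble a `nextflow run` argument list.

    workflow_params: Autometa parameters, emitted with two hyphens (--input).
    nextflow_options: (name, value) pairs for nextflow itself, emitted with
    one hyphen (-profile, -r).
    """
    cmd = ["nextflow", "run", pipeline]
    for name, value in nextflow_options:
        cmd += [f"-{name}", str(value)]
    for name, value in workflow_params.items():
        cmd += [f"--{name}", str(value)]
    if resume:
        cmd.append("-resume")  # nextflow flag: one hyphen, no value
    return cmd


cmd = build_nextflow_command(
    "KwanLab/Autometa",
    {"input": "/path/to/sample_sheet.csv"},
    nextflow_options=[("r", "main"), ("profile", "standard")],
)
print(" ".join(cmd))
# nextflow run KwanLab/Autometa -r main -profile standard --input /path/to/sample_sheet.csv
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` to launch the pipeline from a script.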

Running the pipeline

After you are finished double-checking your parameter settings, click “Launch” at the top right of the web-based GUI page, or “Launch workflow” at the bottom of the page. After returning to the terminal, you should be presented with the prompt Do you want to run this command now? [y/n]; enter y to begin the pipeline.

Note

This process will lead to nf-core tools creating a file named nf-params.json. This file contains the parameters you specified that differ from the pipeline’s defaults. It can also be manually modified and/or shared to allow reproducible configuration of settings (e.g. among members of a lab sharing the same server).

Additionally, all Autometa-specific pipeline parameters can be used as command line arguments to the nextflow run ... command by prepending the parameter name with two hyphens (e.g. --outdir /path/to/output/workflow/results).
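Because nf-params.json is plain JSON, it can also be generated or edited with a script, for example when lab members share settings. A minimal sketch, assuming the parameter names input and outdir used on this page; `write_params_file` is hypothetical.

```python
import json


def write_params_file(path, params):
    """Write pipeline parameters to a JSON file for `nextflow run -params-file`."""
    with open(path, "w") as handle:
        json.dump(params, handle, indent=4)
        handle.write("\n")
```

For example, `write_params_file("nf-params.json", {"input": "/path/to/sample_sheet.csv", "outdir": "/path/to/output/workflow/results"})` produces a file that can be passed with -params-file nf-params.json.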

Caution

If you are restarting from a previous run, DO NOT FORGET to also add the -resume flag to the nextflow run command.

Notice only 1 hyphen is used with the -resume nextflow parameter!

Advanced

Parallel computing and computer resource allotment

While you might want to provide Autometa all the compute resources available in order to get results faster, that may or may not actually achieve the fastest run time.

Within the Autometa pipeline, parallelization happens by providing all the assemblies at once to software that internally handles parallelization.

The Autometa pipeline will try to use all resources available to individual pipeline modules. Each module/process has been pre-assigned resource allotments via a low/medium/high tag. This means that even if you don’t select for the pipeline to run in parallel, some modules (e.g. DIAMOND BLAST) may still use multiple cores.

The maximum number of CPUs that any single module can use is defined with the --max_cpus option (default: 4). You can also set --max_memory (default: 16GB) and --max_time (default: 240h). --max_time refers to the maximum time each process is allowed to run, not the execution time for the entire pipeline.

Databases

Autometa uses the following NCBI databases throughout its pipeline:

- Non-redundant nr database

- prot.accession2taxid.gz

- nodes.dmp, names.dmp and merged.dmp - Found within

If you are running Autometa for the first time, you’ll have to download these databases. You may use autometa-update-databases --update-ncbi. This will download the databases to the default path. You can check the default paths using autometa-config --print. If you need to change the default download directory, you can use autometa-config --section databases --option ncbi --value <path/to/new/ncbi_database_directory>.

See autometa-update-databases -h and autometa-config -h for the full list of options.

In your nf-params.json file, you also need to specify the directory where the different databases are present. Make sure that the directory path contains the following databases:

- Diamond-formatted nr file => nr.dmnd
- Extracted files from tarball taxdump.tar.gz
- prot.accession2taxid.gz

{
    "single_db_dir": "$HOME/Autometa/autometa/databases/ncbi"
}

Note

Find the above section of code in nf-params.json and update this path to the folder with all of the downloaded/formatted NCBI databases.
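A quick way to confirm that single_db_dir is ready is to check for the expected files. The sketch below assumes the files named on this page (nr.dmnd, the extracted nodes.dmp, names.dmp and merged.dmp, and prot.accession2taxid.gz) sit directly in that directory; `missing_ncbi_files` is a hypothetical helper.

```python
from pathlib import Path

REQUIRED_NCBI_FILES = (
    "nr.dmnd",
    "nodes.dmp",
    "names.dmp",
    "merged.dmp",
    "prot.accession2taxid.gz",
)


def missing_ncbi_files(db_dir):
    """Return the required NCBI database files that are absent from db_dir."""
    directory = Path(db_dir).expanduser()
    return [name for name in REQUIRED_NCBI_FILES if not (directory / name).is_file()]


missing = missing_ncbi_files("~/Autometa/autometa/databases/ncbi")
print("all databases present" if not missing else f"missing: {missing}")
```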

CPUs, Memory, Disk

Note

Like nf-core pipelines, we have set some automatic defaults for Autometa’s processes. These are dynamic, and each process will try a second attempt using more resources if the first fails due to insufficient resources. Resources are always capped by the following parameters (shown with defaults):

--max_cpus = 2
--max_memory = 6.GB
--max_time = 48.h
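The retry behaviour described above can be pictured as: each attempt multiplies a process's base request, and the result is clipped to the --max_* caps. The sketch below assumes the linear base × attempt scaling common in nf-core templates and uses the caps listed above as defaults; Autometa's actual per-label multipliers may differ, and `requested_resources` is hypothetical.

```python
def requested_resources(base_cpus, base_memory_gb, attempt, max_cpus=2, max_memory_gb=6):
    """Scale a process's request by its attempt number, then cap it.

    Mirrors the nf-core pattern `check_max(base * task.attempt, ...)`; the
    linear scaling is an assumption, not Autometa's confirmed configuration.
    """
    cpus = min(base_cpus * attempt, max_cpus)
    memory_gb = min(base_memory_gb * attempt, max_memory_gb)
    return cpus, memory_gb


print(requested_resources(1, 4, attempt=1))  # (1, 4)
print(requested_resources(1, 4, attempt=2))  # (2, 6): memory capped at --max_memory
```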

The best practice for changing resources is to create a new config file and point to it at runtime by adding the flag -c path/to/custom/file.config.

For example, to give all resource-intensive (i.e. having label process_high) jobs additional memory and cpus, create a file called process_high_mem.config and insert:

process {
    withLabel:process_high {
        memory = 200.GB
        cpus = 32
    }
}

Then your command to run the pipeline (assuming you’ve already run nf-core launch KwanLab/Autometa, which created an nf-params.json file) would look something like:

nextflow run KwanLab/Autometa -params-file nf-params.json -c process_high_mem.config

Caution

If you are restarting from a previous run, DO NOT FORGET to also add the -resume flag to the nextflow run command.

Notice only 1 hyphen is used with the -resume nextflow parameter!

For additional information and examples, see Tuning workflow resources.

Additional Autometa parameters

Up to date descriptions and default values of Autometa’s nextflow parameters can be viewed using the following command:

nextflow run KwanLab/Autometa -r main --help

You can also adjust other pipeline parameters that ultimately control how binning is performed.

- params.length_cutoff : Smallest contig you want binned (default is 3000bp).
- params.kmer_size : k-mer size to use.
- params.norm_method : Which k-mer frequency normalization method to use. See the Advanced Usage section for details.
- params.pca_dimensions : Number of dimensions to which the initial k-mer frequency matrix is reduced (default is 50). See the Advanced Usage section for details.
- params.embedding_method : Choices are sksne, bhsne, umap, densmap, trimap (default is bhsne). See the Advanced Usage section for details.
- params.embedding_dimensions : Final dimensions of the k-mer frequency matrix (default is 2). See the Advanced Usage section for details.
- params.kingdom : Bin contigs belonging to this kingdom. Choices are bacteria and archaea (default is bacteria).
- params.clustering_method : Which clustering method to use. Choices are “dbscan” and “hdbscan” (default is “dbscan”). See the Advanced Usage section for details.
- params.binning_starting_rank : Which taxonomic rank to start the binning from. Choices are superkingdom, phylum, class, order, family, genus, species (default is superkingdom). See the Advanced Usage section for details.
- params.classification_method : Which classification method to use for the unclustered recruitment step. Choices are decision_tree and random_forest (default is decision_tree). See the Advanced Usage section for details.
- params.completeness : Minimum completeness needed to keep a cluster (default is at least 20% complete, i.e. 20). See the Advanced Usage section for details.
- params.purity : Minimum purity needed to keep a cluster (default is at least 95% pure, i.e. 95). See the Advanced Usage section for details.
- params.cov_stddev_limit : Which clusters to keep depending on the coverage std. dev. (default is 25%, i.e. 25). See the Advanced Usage section for details.
- params.gc_stddev_limit : Which clusters to keep depending on the GC% std. dev. (default is 5%, i.e. 5). See the Advanced Usage section for details.
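Typos in choice-type parameters are easy to make in a hand-edited nf-params.json. The sketch below checks values against the choices listed on this page (the output of --help remains authoritative); `invalid_params` and the ALLOWED_CHOICES table are hypothetical.

```python
# Choices copied from the parameter list above; --help is the authoritative source.
ALLOWED_CHOICES = {
    "embedding_method": {"sksne", "bhsne", "umap", "densmap", "trimap"},
    "kingdom": {"bacteria", "archaea"},
    "clustering_method": {"dbscan", "hdbscan"},
    "binning_starting_rank": {
        "superkingdom", "phylum", "class", "order", "family", "genus", "species",
    },
    "classification_method": {"decision_tree", "random_forest"},
}


def invalid_params(params):
    """Return {name: value} for choice parameters set to an undocumented value."""
    return {
        name: value
        for name, value in params.items()
        if name in ALLOWED_CHOICES and value not in ALLOWED_CHOICES[name]
    }


print(invalid_params({"clustering_method": "hdbscan", "kingdom": "fungi", "length_cutoff": 3000}))
# {'kingdom': 'fungi'}
```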

Customizing Autometa’s Scripts

If you want to tweak some of the scripts, run on your own scheduling system, or modify the pipeline, you can clone the repository and then run nextflow directly from the scripts as below:

# Clone the Autometa repository into $HOME/Autometa (the path used below)
git clone git@github.com:KwanLab/Autometa.git $HOME/Autometa
# Modify some code
# e.g. one of the local modules
code $HOME/Autometa/modules/local/align_reads.nf
# Generate nf-params.json file using nf-core
nf-core launch $HOME/Autometa
# Then run nextflow
nextflow run $HOME/Autometa -params-file nf-params.json -profile slurm

Note

If you only have a few metagenomes to process and you would like to customize Autometa’s behavior, it may be easier to first try customization of the 🐚 Bash Workflow 🐚

Useful options

-c : If you have configured nextflow with your executor (see Configuring your process executor) and have made other modifications to how nextflow runs using your nextflow.config file, you can specify that file using the -c flag.

To see all of the command line options available, refer to the nextflow CLI documentation.

Resuming the workflow

One of the most powerful features of nextflow is resuming the workflow from the last completed process. If your pipeline was interrupted for some reason, you can resume it from the last completed process using the resume flag (-resume).

E.g., nextflow run KwanLab/Autometa -params-file nf-params.json -c my_other_parameters.config -resume

Execution Report

After running nextflow, you can see the execution statistics of your Autometa run, including the time taken, CPUs used, RAM used, etc., separately for each process. Nextflow will generate summary, timeline and trace reports automatically for you in the ${params.outdir}/trace directory. You can read more about this in the nextflow docs on execution reports.
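The trace report is a tab-separated table with one row per task, so it is easy to summarize. The sketch below assumes the default name and status columns that Nextflow writes to trace files; the exact trace file name inside ${params.outdir}/trace is not given here, the task names in the example are made up, and `summarize_trace` is hypothetical.

```python
import csv
import io
from collections import Counter


def summarize_trace(handle):
    """Count task statuses in a Nextflow trace file and list failed task names."""
    statuses = Counter()
    failed = []
    for row in csv.DictReader(handle, delimiter="\t"):
        statuses[row["status"]] += 1
        if row["status"] == "FAILED":
            failed.append(row["name"])
    return statuses, failed


# Made-up rows for illustration; real trace files contain many more columns.
example = io.StringIO(
    "task_id\tname\tstatus\n"
    "1\tALIGN_READS (example_1)\tCOMPLETED\n"
    "2\tDIAMOND_BLASTP (example_1)\tFAILED\n"
)
print(summarize_trace(example))
```

For a real run, open the trace file with `open(path, newline="")` and pass the handle to `summarize_trace`.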

Visualizing the Workflow

You can visualize the entire workflow, i.e. create the directed acyclic graph (DAG) of processes from the written DOT file. First install Graphviz (conda install -c anaconda graphviz), then run dot -Tpng < pipeline_info/autometa-dot > autometa-dag.png to get the DAG in PNG format.

Configuring your process executor

For nextflow to run the Autometa pipeline through a job scheduler, you will need to update the respective profile section in nextflow’s config file. Each profile may be configured with any available scheduler as noted in the nextflow executors docs. By default, nextflow will use your local computer as the ‘executor’. The next section briefly walks through nextflow executor configuration to run with the slurm job scheduler.

We have prepared a template for nextflow.config, which you can access from the KwanLab/Autometa GitHub repository using this nextflow.config template. Go ahead and copy this file to your desired location and open it in your favorite text editor (e.g. Vim, nano, VSCode, etc.).

SLURM

This allows you to run the pipeline using the SLURM resource manager. To do this you’ll first needed to identify the

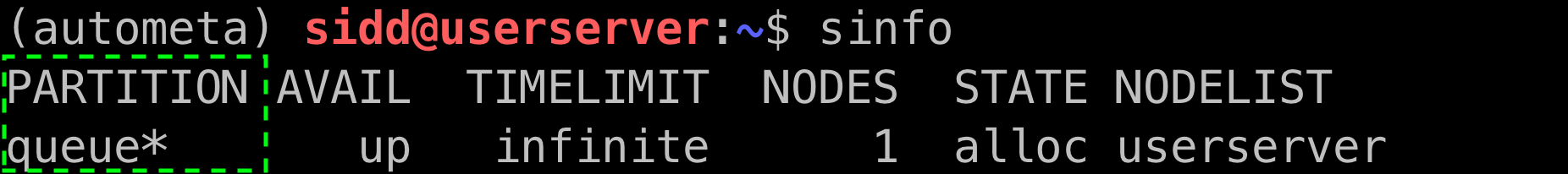

slurm partition to use. You can find the available slurm partitions by running sinfo. Example: On running sinfo

on our cluster we get the following:

The slurm partition available on our cluster is queue. You’ll need to update this in nextflow.config.

profiles {
    // Find this section of code in nextflow.config
    slurm {
        process.executor = "slurm"
        // NOTE: You can determine your slurm partition (e.g. process.queue) with the `sinfo` command
        // Set SLURM partition with queue directive.
        process.queue = "queue" // <<-- change this to whatever your partition is called
        // queue is the slurm partition to use in our case
        docker.enabled = true
        docker.userEmulation = true
        singularity.enabled = false
        podman.enabled = false
        shifter.enabled = false
        charliecloud.enabled = false
        executor {
            queueSize = 8
        }
    }
}

More parameters that are available for the slurm executor are listed in the nextflow executor docs for slurm.
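If you script your configuration, partition names can be read from sinfo instead of a screenshot. The sketch below assumes the output of `sinfo --noheader --format=%P`, where %P prints each partition name and marks the default partition with a trailing *; `parse_partitions` is hypothetical.

```python
def parse_partitions(sinfo_output):
    """Parse `sinfo --noheader --format=%P` output.

    Returns (partition_names, default_partition). sinfo appends '*' to the
    default partition's name.
    """
    names = []
    default = None
    for line in sinfo_output.splitlines():
        name = line.strip()
        if not name:
            continue
        if name.endswith("*"):
            name = name[:-1]
            default = name
        if name not in names:
            names.append(name)
    return names, default


print(parse_partitions("queue*\ngpu\n"))  # (['queue', 'gpu'], 'queue')
```

On a cluster, the input string can come from `subprocess.run(["sinfo", "--noheader", "--format=%P"], capture_output=True, text=True, check=True).stdout`, and the chosen name goes into process.queue.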

Docker image selection

Especially when developing new features, it may be necessary to run the pipeline with a custom Docker image. Create a new image by navigating to the top Autometa directory and running make image. This will create a new Autometa Docker image, tagged with the name of the current Git branch.

To use this tagged version (or any other Autometa image tag), add the argument --autometa_image tag_name to the nextflow run command.